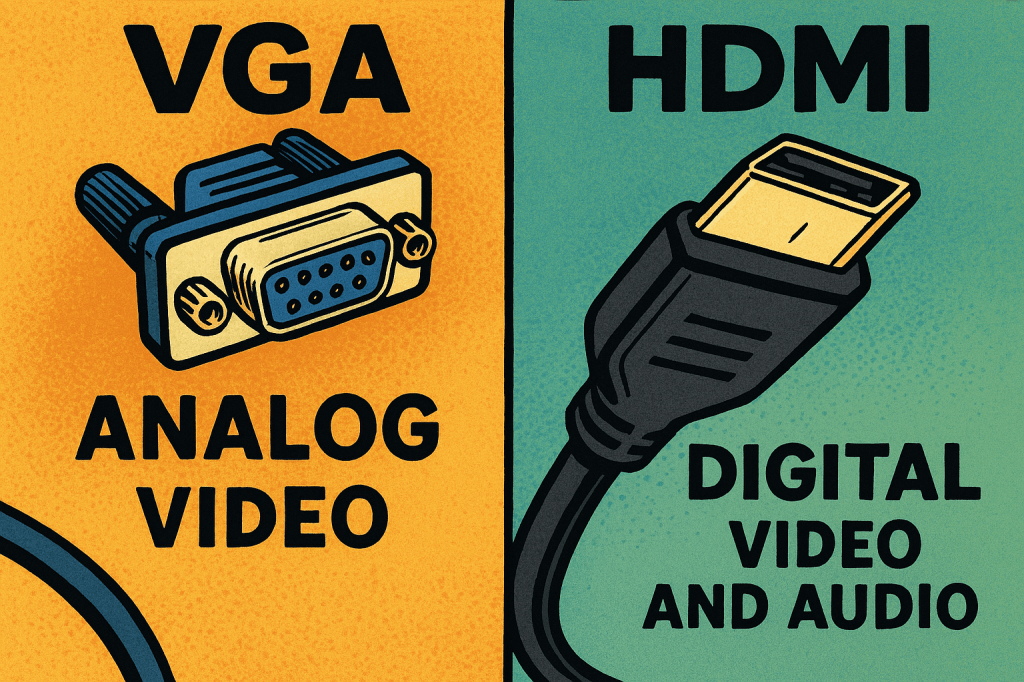

VGA (Video Graphics Array) and HDMI (High-Definition Multimedia Interface) are both used to connect displays to computers, but they differ in signal type, quality, and capabilities.

VGA is an older analog interface introduced in the late 1980s that transmits video only. It uses a 15-pin D-sub connector. Because the signal is analog, it degrades over long cable runs, which can produce blurry or distorted images, especially at high resolutions. VGA is rarely found on modern devices but may still be encountered on legacy equipment and projectors.

HDMI, in contrast, is a digital interface capable of transmitting both video and audio over a single cable. It supports higher resolutions (up to 8K with HDMI 2.1), encrypted content (HDCP), and additional features like Ethernet over HDMI and ARC (Audio Return Channel).
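To give a rough sense of why higher resolutions need newer HDMI versions, here is a minimal Python sketch that estimates the uncompressed data rate of a video mode and compares it with approximate per-version payload rates. The function names and the `HDMI_PAYLOAD_GBPS` table are illustrative assumptions, not part of any HDMI tooling. The payload figures are approximations (link rate minus line-coding overhead), and the estimate counts active pixels only, so real requirements are somewhat higher once blanking intervals are included.

```python
# Approximate usable payload per HDMI version, in Gbit/s.
# Assumed figures: nominal link rate minus line-coding overhead.
HDMI_PAYLOAD_GBPS = {
    "HDMI 1.4": 8.16,   # 10.2 Gbit/s link, 8b/10b coding
    "HDMI 2.0": 14.4,   # 18 Gbit/s link, 8b/10b coding
    "HDMI 2.1": 42.67,  # 48 Gbit/s link, 16b/18b coding
}


def active_pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed data rate of the visible pixels only, in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def lowest_version_for(width, height, refresh_hz, bits_per_pixel=24):
    """Return the first listed HDMI version whose payload covers the mode.

    Returns None if no listed version can carry the mode uncompressed
    under the assumptions above.
    """
    rate = active_pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel)
    for name, capacity in HDMI_PAYLOAD_GBPS.items():
        if rate <= capacity:
            return name
    return None


if __name__ == "__main__":
    modes = [("1080p60", 1920, 1080), ("4K60", 3840, 2160), ("8K60", 7680, 4320)]
    for label, w, h in modes:
        rate = active_pixel_rate_gbps(w, h, 60)
        print(f"{label}: {rate:.2f} Gbit/s -> {lowest_version_for(w, h, 60)}")
```

Under these assumptions, 8K at 60 Hz with 24 bits per pixel does not fit uncompressed even in HDMI 2.1, which is why such modes typically rely on chroma subsampling or Display Stream Compression. Passing `bits_per_pixel=12` (roughly 8-bit 4:2:0) brings the same mode within the HDMI 2.1 figure.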

HDMI is the current standard for TVs, monitors, gaming consoles, and newer laptops. Adapters exist to convert VGA to HDMI and vice versa, but because one signal is analog and the other digital, the conversion requires an active adapter, which may need external power.

